Cybersecurity Snapshot: As ChatGPT Fire Rages, NIST Issues AI Security Guidance

Learn all about NIST’s new framework for artificial intelligence risk management. Plus, how organizations are balancing AI and data privacy. Also, check out our ad-hoc poll on cloud security. Then read about how employee money-transfer scams are on the upswing. And much more!

Dive into six things that are top of mind for the week ending Feb. 3.

1 - Amid ChatGPT furor, U.S. issues framework for secure AI

Concerned that makers and users of artificial intelligence (AI) systems – as well as society at large – lack guidance about the risks and dangers associated with these products, the U.S. National Institute of Standards and Technology (NIST) is stepping in.

With its new “Artificial Intelligence Risk Management Framework,” NIST hopes to raise awareness of AI products’ unique risks, such as their vulnerability to manipulation through tampering with the data their algorithms are trained on. AI systems can also be unduly influenced by arbitrary societal dynamics.
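NIST’s framework doesn’t prescribe code, but a toy example can make the data-tampering risk concrete. The sketch below illustrates training-data poisoning and is not taken from the framework. It assumes a hypothetical one-feature “risk score” and a simple nearest-centroid classifier. It shows how a handful of mislabeled training points can flip the model’s verdict on the same input.

```python
from statistics import mean

def train_centroids(samples):
    """Nearest-centroid model: the mean feature value for each label."""
    by_label = {}
    for x, label in samples:
        by_label.setdefault(label, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}

def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))

# Hypothetical training data: one "risk score" feature per sample.
clean = [(1.0, "benign"), (2.0, "benign"), (3.0, "benign"),
         (7.0, "malicious"), (8.0, "malicious"), (9.0, "malicious")]

# An attacker slips in a few high-score samples mislabeled as "benign".
poisoned = clean + [(8.5, "benign"), (9.0, "benign"), (9.5, "benign")]

clean_model = train_centroids(clean)        # benign=2.0, malicious=8.0
poisoned_model = train_centroids(poisoned)  # benign=5.5, malicious=8.0

print(predict(clean_model, 6.0))     # malicious
print(predict(poisoned_model, 6.0))  # benign
```

Only three tampered records shift the “benign” centroid far enough that the same input now gets waved through, which is why controls on training-data integrity matter.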

Although the framework’s recommendations aren’t mandatory, its arrival is timely: the launch late last year of the generative AI chatbot ChatGPT has triggered feverish discussions globally about AI’s benefits and downsides.

The new framework is intended to help AI system designers, developers and users address and manage AI risks via “flexible, structured and measurable” processes, according to NIST. “It offers a new way to integrate responsible practices and actionable guidance to operationalize trustworthy and responsible AI,” NIST Director Laurie E. Locascio said in a statement.

NIST, which started working on the framework 18 months ago, expects to revise it periodically and plans to launch the “Trustworthy and Responsible AI Resource Center” to assist organizations in putting the framework into practice.

To get all the details, check out the full 48-page framework document, a companion playbook, the launch press conference, statements from interested individuals and organizations and a roadmap for future work, along with coverage from Bloomberg, VentureBeat and TechPolicy.

For more information about AI risks and about the promise and peril of ChatGPT specifically:

- “Machine Generated Text: A Comprehensive Survey of Threat Models and Detection Methods” (American University and University of Ottawa researchers)

- “What is generative AI?” (McKinsey)

- “ChatGPT Artificial Intelligence: An Upcoming Cybersecurity Threat?” (DarkReading)

- “Cyber Insights 2023: Artificial Intelligence” (SecurityWeek)

- “ChatGPT: Hopes, Dreams, Cheating and Cybersecurity” (GovTech)

VIDEOS

Introduction to the NIST AI Risk Management Framework (NIST)

What You Need to Know About ChatGPT and How It Affects Cybersecurity (SANS Institute)

2 - And continuing with the AI topic …

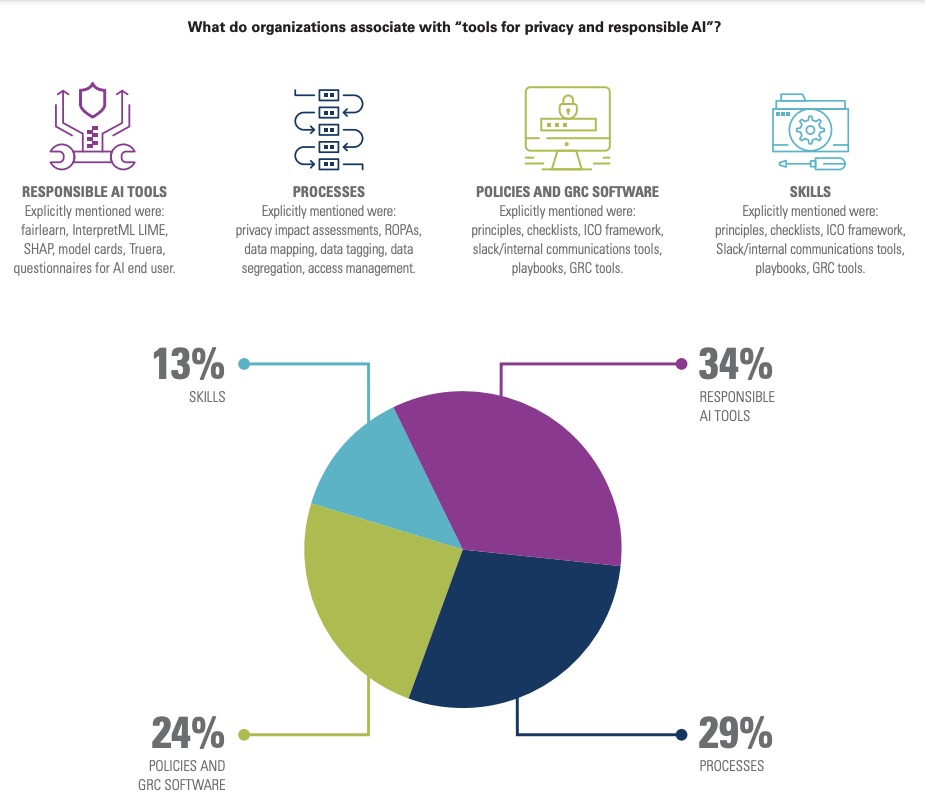

So while NIST aims to inform and improve AI risk management going forward, another study probed how organizations have so far tackled AI governance as it overlaps with data privacy management.

Because AI systems often feed on personal data, the International Association of Privacy Professionals (IAPP) examined how mature organizations are at using AI responsibly and securely in its “Privacy and AI Governance Report.”

The report, which surveyed an undisclosed number of privacy, policy, legal and technical AI experts in North America, Europe and Asia, found that:

- Most surveyed organizations (70%) are at some point in the process of including responsible AI in their governance, while 10% haven’t started and 20% have already implemented these practices.

- The top AI risks worrying respondents were privacy, harmful bias, bad governance and lack of legal clarity.

- Half of organizations are building responsible AI governance on top of existing, mature privacy programs.

- Overall, it’s a struggle for organizations to find and acquire appropriate tools to address responsible AI, such as for detecting bias in AI applications.

(Source: “Privacy and AI Governance Report” from the International Association of Privacy Professionals (IAPP), January 2023)

The report recommends that to advance responsible AI, organizations should focus on:

- Gaining leadership support

- Championing ethical uses of data

- Growing and improving training on the use of AI

- Implementing AI ethics in the same way as privacy by design and default

For more information:

- “Responsible AI is a top management concern, so why aren’t organizations deploying it?” (VentureBeat)

- “5 ways to avoid artificial intelligence bias with responsible AI” (World Economic Forum)

- “Responsible AI has a burnout problem” (MIT Technology Review)

- “New report documents the business benefits of ‘responsible AI’” (MIT Sloan School)

VIDEOS

Responsible AI: Tackling Tech's Largest Corporate Governance Challenges (UC Berkeley)

Exploring AI and data science ethics review processes (Ada Lovelace Institute)

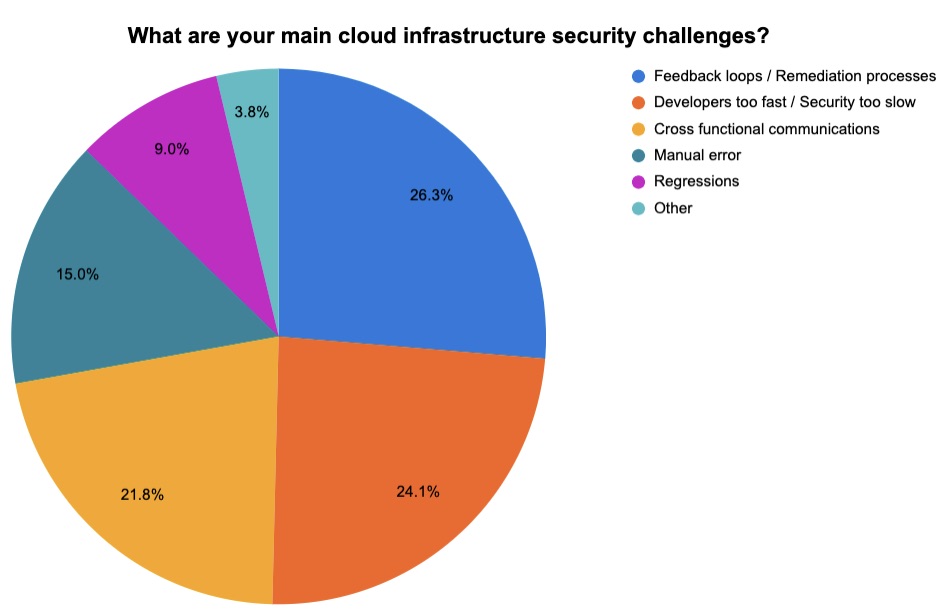

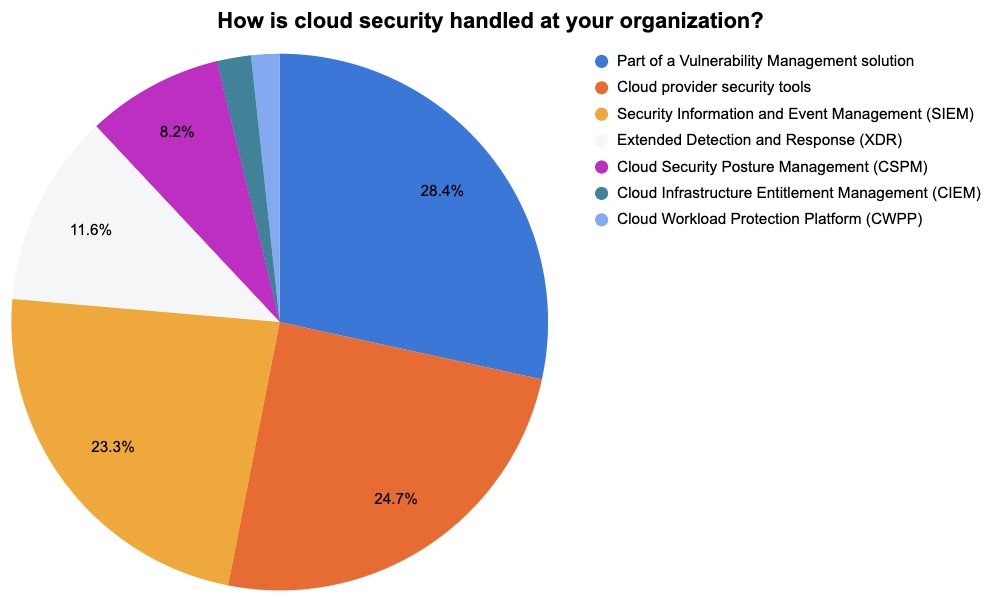

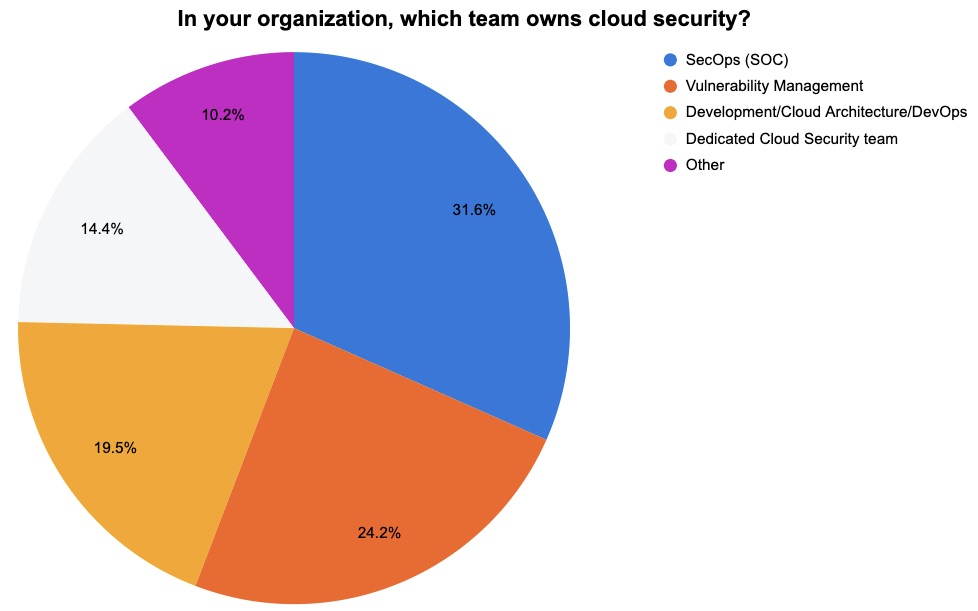

3 - A penny for your thoughts on cloud security

At our recent webinars “The DevSecOps Guide to Clean, Compliant and Secure Infrastructure as Code” and “Tenable.io Customer Update: January 2023,” we polled attendees about a few aspects of their cloud security programs. Check out what they said!

(133 respondents polled by Tenable, January 2023)

(292 respondents polled by Tenable, January 2023)

(215 respondents polled by Tenable, January 2023)

For more information, check out these Tenable resources:

- “Cloud Security: 3 Things InfoSec Leaders Need to Know About the Shared Responsibility Model”

- “The Four Phases of Cloud Security Maturity”

- “CNAPP: What Is It and Why Is It Important for Security Leaders?”

- “SANS 2022 DevSecOps Survey: Creating a Culture to Significantly Improve Your Organization’s Security Posture”

- “Cloud Security Roundtable: Scaling Cloud Adoption without Sacrificing Security Standards”

4 - CISA preps new office for software supply chain security

Need help implementing security best practices for your software supply chain? The U.S. Cybersecurity and Infrastructure Security Agency (CISA) plans to open an office whose charter will be to put guidance, requirements and recommendations for software supply chain security into action.

That’s according to an article from Federal News Network (FNN), which interviewed Shon Lyublanovits, a former General Services Administration official who will spearhead the new cyber supply chain risk management office (C-SCRM).

“We’ve got to get to a point where we move out of this idea of just thinking broadly about C-SCRM and really figuring out what chunks I want to start to tackle first, creating that roadmap so that we can actually move this forward,” Lyublanovits told FNN.

The new office’s resources will be aimed not just at helping U.S. federal government agencies, but also state and local governments, as well as private sector organizations.

News about the new office follows the publication last year of three software supply chain security guides by CISA, the National Security Agency and the Office of the Director of National Intelligence: one for software suppliers, one for software developers and one for software customers.

For more information about software supply chain security:

- “What is supply chain security?” (TechTarget)

- “Best practices for boosting supply chain security” (ComputerWeekly)

- “Software Supply Chain Best Practices” (Cloud Native Computing Foundation)

- “Software Supply Chain Security Guidance” (U.S. National Institute of Standards and Technology)

- “The Open Source Software Security Mobilization Plan” (The Linux Foundation and The Open Source Security Foundation)

VIDEOS

How to secure your software supply chain from dependencies to deployment (Google Cloud)

The Secure Software Supply Chain (Strange Loop Conference)

New Guidelines for Enhancing Software Supply Chain Security Under EO 14028 (RSA Conference)

5 - Employee money-transfer scams shoot up

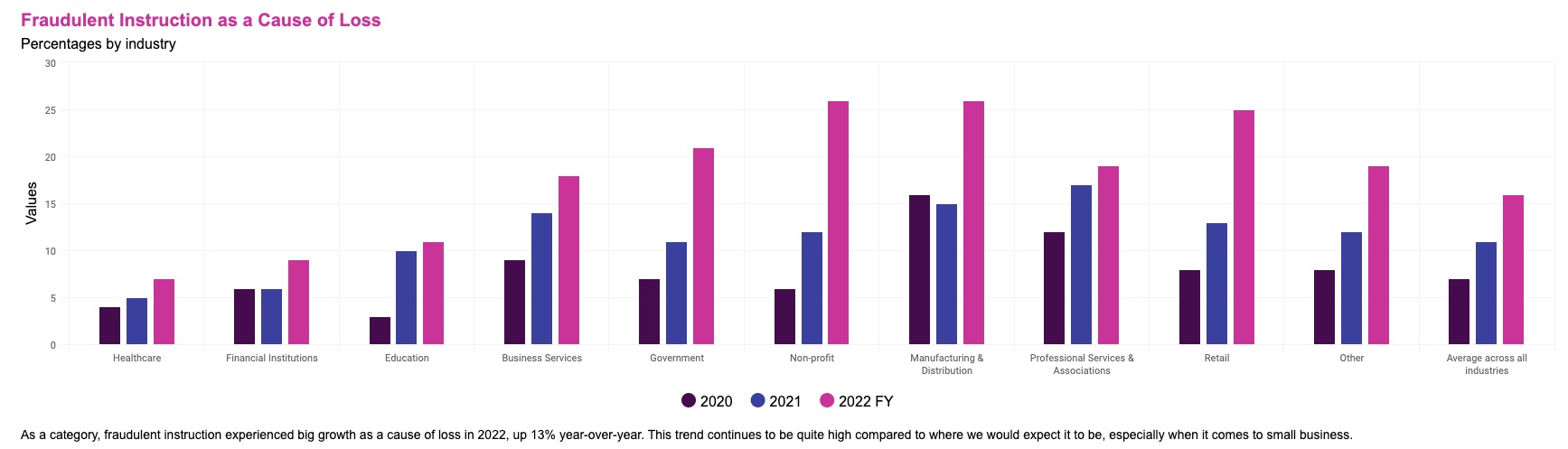

Cyber scams in which employees get duped into transferring money to a cyber thief went up in 2022, highlighting the importance of cybersecurity awareness training in workplaces.

The finding comes from insurer Beazley Group’s latest “Cyber Services Snapshot” report. Published this week, the report states that, as a cause of loss, “fraudulent instruction” scams went up 13% compared with 2021.

(Source: Beazley Group’s “Cyber Services Snapshot” report, January 2023)


Unsurprisingly, Beazley Group expects threat actors to continue pursuing this type of attack aggressively in 2023. It recommends that organizations:

- Better educate employees about cyber fraud tactics, such as spoofed emails

- Establish processes for verifying payment requests

- Enhance logging practices

- Invest in identity and access management
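Beazley’s report doesn’t spell out a verification workflow. The sketch below illustrates the “verify payment requests” recommendation. It assumes a hypothetical vendor master record and an arbitrary high-value threshold. A payment system could flag requests that must be confirmed through a known-good channel before any money moves. A known-good channel means something like a phone number already on file, not one supplied in the request.

```python
from dataclasses import dataclass

# Hypothetical policy value; not a Beazley recommendation.
HIGH_VALUE_THRESHOLD = 10_000

@dataclass
class PaymentRequest:
    vendor_id: str
    amount: float
    bank_account: str
    requested_via_email: bool

def needs_out_of_band_check(req, vendor_accounts):
    """Return the reasons a request must be confirmed out of band.

    vendor_accounts is a hypothetical vendor master record mapping
    vendor IDs to the bank account already on file.
    """
    reasons = []
    known = vendor_accounts.get(req.vendor_id)
    if known is None:
        reasons.append("unknown vendor")
    elif known != req.bank_account:
        reasons.append("bank details differ from vendor master record")
    if req.amount >= HIGH_VALUE_THRESHOLD:
        reasons.append("amount at or above threshold")
    if req.requested_via_email:
        reasons.append("request arrived by email")
    return reasons

vendor_accounts = {"acme": "GB00-1111"}
suspicious = PaymentRequest("acme", 25_000, "GB00-9999", True)
routine = PaymentRequest("acme", 500, "GB00-1111", False)
print(needs_out_of_band_check(suspicious, vendor_accounts))
print(needs_out_of_band_check(routine, vendor_accounts))  # []
```

The function returns reasons rather than a simple yes/no. That way, whoever makes the callback knows exactly what to confirm. The field names and threshold are placeholders to adapt to your own payment process.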

The report also warns that as threat actors increase the sophistication of their attacks, it’s critical for businesses to harden the configuration of their IT tools, in particular their public cloud services. Beazley advises the following:

- Don’t rely on default security configurations

- Learn about and leverage your products’ and services’ advanced security settings

- Check with your managed service providers to ensure they’re fine-tuning security configurations as well
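One way to act on “don’t rely on default security configurations” is to compare each service’s live settings against a hardened baseline you define. The sketch below assumes hypothetical setting names and baseline values. Real cloud providers expose different settings through their own consoles, APIs and tools.

```python
# Hypothetical hardened baseline; setting names are illustrative only.
HARDENED_BASELINE = {
    "mfa_required": True,
    "public_bucket_access": False,
    "audit_logging": True,
    "min_tls_version": "1.2",
}

def config_drift(actual, baseline=HARDENED_BASELINE):
    """Return {setting: (actual_value, expected_value)} for every mismatch.

    Settings missing from `actual` show up with an actual value of None.
    """
    return {
        key: (actual.get(key), expected)
        for key, expected in baseline.items()
        if actual.get(key) != expected
    }

# Example: a service still running with (hypothetical) default settings.
defaults = {"mfa_required": False, "public_bucket_access": False,
            "audit_logging": False}
for setting, (found, wanted) in config_drift(defaults).items():
    print(f"{setting}: found {found!r}, expected {wanted!r}")
```

The same report could be shared with a managed service provider to confirm that they are tuning the settings you care about.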

For more information about the report, check out coverage from Insurance Business, Global Reinsurance, Insurance Business Mag and Strategic Risk.

6 - Try to see it my way: Improving business-security communications

And finally, here are some tips for security leaders who want to communicate better with their business counterparts.

In the blog “Tips for Effective Negotiations with Leadership,” IANS Research makes a variety of recommendations, including:

- Rather than hammering away at why policies must be followed or else, security leaders should speak about the underlying strategies and how they serve as avenues to accomplish business goals.

- Strive to understand the business needs behind the issues being discussed, and align security issues to broader business goals, to show that you’re both rowing in the same direction and with the same purpose.

- Highlight how security controls and capabilities can generate a competitive advantage for the business, such as putting the organization on better footing for deals that require specific security certifications or compliance with regulations.

For more information about this topic:

- “How to Become a Business-Aligned Security Leader” (Tenable)

- “CxOs Need Help Educating Their Boards” (Cloud Security Alliance)

- “7 mistakes CISOs make when presenting to the board” (CSO Magazine)

- “Cybersecurity on the board: How the CISO role is evolving for a new era” (TechMonitor)

- “A Recipe for Success: CISOs Share Top Tips for Successful Board Presentations” (Tenable)
