Cybersecurity Snapshot: Want to Deploy AI Securely? New Industry Group Will Compile AI Safety Best Practices

A group that includes the Cloud Security Alliance, CISA and Google is working to compile a comprehensive collection of best practices for secure AI use. Meanwhile, check out a draft of secure configuration recommendations for the Google Workspace suite. Plus, MITRE plans to release a threat model for embedded devices used in critical infrastructure. And much more!

Dive into six things that are top of mind for the week ending December 15.

1 - CSA-led coalition to document secure AI best practices

Have you been tasked with ensuring your company’s use of AI is secure and compliant, and feel you need guidance? Comprehensive guidelines are forthcoming from the AI Safety Initiative, a new group that launched this week under the leadership of the Cloud Security Alliance.

Partners include government agencies, such as the U.S. Cybersecurity and Infrastructure Security Agency (CISA); major AI vendors, such as Amazon, Anthropic, Google, Microsoft and OpenAI; and members of academia.

Initially concentrating on generative AI, the AI Safety Initiative has four main goals:

- Craft AI best practices and make them freely available

- Empower organizations to adopt AI confidently and quickly with the knowledge they’re doing so safely, ethically and compliantly

- Complement government AI regulations with industry self-regulation

- Proactively address ethical and societal issues bound to emerge as AI technology advances

The partnership can help reduce the risk of misuse by sharing best practices for managing the lifecycle of AI capabilities and ensuring that “they are designed, developed, and deployed to be safe and secure,” CISA Director Jen Easterly said in a statement.

The AI Safety Initiative already has more than 1,500 participants, and these four core working groups have started meeting:

- AI Technology and Risk Working Group

- AI Governance & Compliance Working Group

- AI Controls Working Group

- AI Organizational Responsibilities Working Group

Interested in joining? Fill out this form.

To get more details:

- Read the group’s announcement “Artificial Intelligence Leaders Partner with Cloud Security Alliance to Launch the AI Safety Initiative”

- Check out the AI Safety Initiative’s home page

For more information about using AI securely:

- “13 Principles for Using AI Responsibly” (Harvard Business Review)

- “OWASP AI Security and Privacy Guide” (OWASP)

- “Guidelines for secure AI system development” (U.K. National Cyber Security Centre)

- “8 Questions About Using AI Responsibly, Answered” (Harvard Business Review)

- “Adopting AI Responsibly: Guidelines for Procurement of AI Solutions by the Private Sector” (World Economic Forum)

VIDEOS

Ultimate Guide to AI for Businesses (TechTarget)

Why AI developers say regulation is needed to keep AI in check (PBS Newshour)

2 - CISA releases config baselines for Google cloud apps

If your organization uses the Google Workspace (GWS) suite of cloud productivity apps, there’s fresh guidance in the works on how to properly configure these services.

This week, CISA released a draft of its “Secure Cloud Business Applications (SCuBA) Google Workspace (GWS) Secure Configuration Baselines,” which offer “minimum viable” configurations for nine GWS services.

CISA also made available ScubaGoggles, an assessment tool that it co-developed with Google to verify that your GWS configurations conform with the SCuBA policies.

While the recommendations and the tool are intended for use by U.S. federal government agencies, any organization in the public and private sectors can access them.

Here’s a small sample of the GWS configuration recommendations:

- Gmail

  - Disable users’ ability to delegate access to their mailboxes to others

  - Enable DomainKeys Identified Mail (DKIM) to help prevent email spoofing

  - Enable protections against suspicious email attachments and scripts

- Google Calendar

  - Limit externally shared calendars to show only users’ “free / busy” information

  - Warn users whenever they invite guests from outside their domain to meetings

  - Disable interoperation between Google Calendar and Microsoft Exchange unless the organization requires it

- Google Chat

  - Enable chat history retention in order to maintain a record of chats for legal or compliance purposes

  - Disable external file sharing

  - Restrict external chats to domains that have been allowlisted

- Google Drive and Docs

  - Disable sharing outside of the organization’s domain

  - Allow users to create new shared drives

  - Disable access to Drive from the Drive Software Development Kit (SDK) API

The other five guides are for Groups for Business, Google Common Controls, Google Classroom, Google Meet and Google Sites.
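To make the idea of checking configurations against a baseline concrete, here is a minimal Python sketch. It is not how ScubaGoggles works and does not use any Google or CISA API; the setting names are hypothetical labels invented for illustration, and the expected values simply mirror a few of the sample recommendations listed above.

```python
from typing import Any

# Hypothetical setting names (NOT real GWS or ScubaGoggles keys).
# Expected values mirror a few of the sample recommendations above.
BASELINE: dict[str, Any] = {
    "gmail.mail_delegation_enabled": False,
    "gmail.dkim_enabled": True,
    "calendar.external_sharing": "free_busy_only",
    "chat.history_retention_enabled": True,
    "chat.external_file_sharing_enabled": False,
    "drive.external_sharing_enabled": False,
}


def find_deviations(current: dict[str, Any]) -> list[str]:
    """Return the sorted names of settings that differ from the baseline.

    A setting that is missing from `current` counts as a deviation.
    """
    missing = object()  # sentinel that never equals a real setting value
    return sorted(
        name
        for name, expected in BASELINE.items()
        if current.get(name, missing) != expected
    )


if __name__ == "__main__":
    # Example tenant: DKIM turned off and chat retention not reported at all.
    tenant = {**BASELINE, "gmail.dkim_enabled": False}
    del tenant["chat.history_retention_enabled"]
    for name in find_deviations(tenant):
        print(f"Deviation from baseline: {name}")
```

Treating a missing setting as a deviation is a deliberate choice in this sketch: an unreported value is flagged for review instead of being silently assumed compliant.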

CISA is fielding comments about the GWS configurations until January 12, 2024.

“Once finalized and fully implemented, the GWS baselines will reduce misconfigurations and enhance the protection of sensitive data, bolstering overall cybersecurity resilience,” reads a CISA statement.

CISA earlier this year released a similar set of configuration recommendations for Microsoft 365.

To get more details, check out:

- The home page of the “Secure Cloud Business Applications (SCuBA) Project,” where you’ll find the Microsoft 365 and GWS configuration recommendations, along with other resources

- The blog “CISA Seeks Public Comment on Newly Developed Secure Configuration Baselines for Google Workspace”

- The alert “CISA Releases SCuBA Google Workspace Secure Configuration Baselines for Public Comment”

More resources on cloud configuration best practices:

- “Hardening and monitoring cloud configuration” (SC Magazine)

- “Cloud Security Roundtable: Scaling Cloud Adoption without Sacrificing Security Standards” (Tenable webinar)

- “Security Guidance for Critical Areas of Focus in Cloud Computing” (Cloud Security Alliance)

- “Top 7 cloud misconfigurations and best practices to avoid them” (TechTarget)

- “New cloud security guidance: it's all about the config” (U.K. NCSC)

3 - MITRE preps threat model for embedded devices in critical infrastructure

Are you involved with making or securing embedded devices used in critical infrastructure environments? If so, you may be interested in a new threat modeling framework designed specifically for these systems that is slated for release in early 2024.

That’s according to an announcement this week from MITRE, which is developing the new framework, called EMB3D Threat Model, with several partners. The motivation behind it? These devices are often buggy and lack security controls.

The EMB3D Threat Model will aim to provide “a common understanding of the threats posed to embedded devices and the security mechanisms required to mitigate them,” MITRE said in a statement.

The framework is aimed at device vendors, manufacturers, asset owners, security researchers, and testing organizations. Anyone interested in reviewing the unreleased version of EMB3D Threat Model can write to MITRE at: [email protected].

To get more details, check out the framework’s announcement “MITRE, Red Balloon Security, and Narf Announce EMB3D – A Threat Model for Critical Infrastructure Embedded Devices.”

For more information about embedded device security:

- “Embedded devices remain vulnerable to ransomware threats” (Embedded.com)

- “Embedded Security: How to Mitigate the Next Attack” (EE Times Europe)

- “Hardware & Embedded Systems: A little early effort in security can return a huge payoff” (NCC Group)

- “Establishing Cyber Resilience in Embedded Systems for Securing Next-Generation Critical Infrastructure” (IEEE)

4 - Cyber agencies warn about exploit of TeamCity bug

Attackers affiliated with the Russian government are targeting JetBrains’ TeamCity server software to exploit CVE-2023-42793, U.S. and international cybersecurity agencies warned this week.

Known as APT29, the Dukes, CozyBear and Nobelium/Midnight Blizzard, the cyber actors are associated with the Russian Foreign Intelligence Service (SVR). Exploiting the vulnerability allows them to bypass authorization and execute code on compromised servers.

The large-scale effort to exploit this vulnerability is global and began in September. TeamCity is a tool for developers to manage software building, compilation, testing and release. The vulnerability can allow attackers to breach software supply chain operations.

So far, however, APT29 has mostly been exploiting the vulnerability to “escalate its privileges, move laterally, deploy additional backdoors, and take other steps to ensure persistent and long-term access to the compromised network environments,” reads the 27-page joint advisory.

Organizations are encouraged to use the indicators of compromise (IOCs) in the advisory to assess whether they’ve been compromised. Those that have should apply the advisory’s incident response recommendations and inform both the FBI and CISA.
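As a rough illustration of what checking against IOCs can look like, the Python sketch below scans log lines for IP addresses on a watch list. The addresses are placeholders from the reserved documentation ranges, not indicators from the advisory, and the function name and log format are assumptions made for this example. A real assessment would use the advisory's actual IOCs and cover more than IP addresses.

```python
import ipaddress
import re

# Placeholder indicators taken from reserved documentation ranges.
# These are NOT the advisory's real IOCs; substitute the published ones.
SUSPECT_IPS = {
    ipaddress.ip_address("192.0.2.10"),
    ipaddress.ip_address("198.51.100.7"),
}

# Loose match for dotted-quad strings; validity is checked separately below.
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def find_ioc_hits(log_lines):
    """Return (line_number, ip) pairs for log lines that mention a suspect IP."""
    hits = []
    for number, line in enumerate(log_lines, start=1):
        for candidate in IPV4_PATTERN.findall(line):
            try:
                address = ipaddress.ip_address(candidate)
            except ValueError:
                continue  # looked like an IP (e.g. "999.1.1.1") but is not valid
            if address in SUSPECT_IPS:
                hits.append((number, candidate))
    return hits


if __name__ == "__main__":
    sample_logs = [
        "2023-12-01 GET /login from 203.0.113.5",
        "2023-12-01 POST /app/rest from 192.0.2.10",
    ]
    for number, ip in find_ioc_hits(sample_logs):
        print(f"Line {number}: matched suspect IP {ip}")
```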

For more information, check out:

- The joint alert “CISA and Partners Release Advisory on Russian SVR-affiliated Cyber Actors Exploiting CVE-2023-42793”

- The joint advisory “Russian Foreign Intelligence Service (SVR) Exploiting JetBrains TeamCity CVE Globally”

- CISA’s home page on Russia cyber threats

- JetBrains’ “CVE-2023-42793 Vulnerability in TeamCity: October 18, 2023 Update” blog

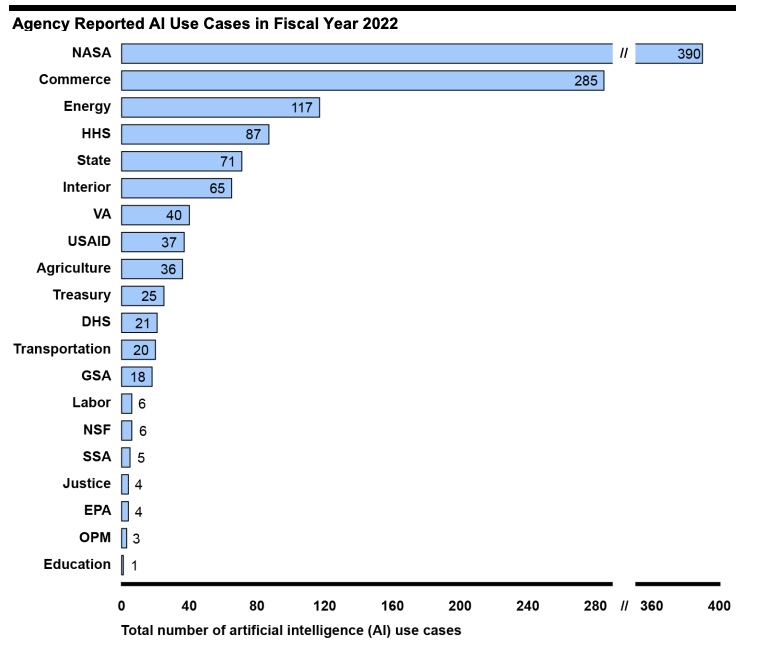

5 - GAO: About 1,200 AI use cases among U.S. gov’t civilian agencies

A new report found that AI use among U.S. civilian federal agencies is growing rapidly, but that they generally could do a better job of managing the technology’s risk.

In a 103-page report, the U.S. Government Accountability Office (GAO) detailed AI use among 20 civilian federal agencies.

Among the findings are:

- Fifteen agencies provided incomplete and inaccurate data about their AI use cases

- In general, agencies have started to comply with AI guidance and requirements, but work remains before they are fully compliant

- The 20 agencies listed about 1,200 AI use cases, although only about 200 of them are currently operational, while the rest are in planning stages

- Unsurprisingly, cybersecurity is one of the areas for which agencies are using AI, along with others such as large dataset reviews and drone photo analysis

- NASA ranked first on the list of use cases, with 390, followed by the Commerce Department with 285

(Source: U.S. Government Accountability Office, December 2023)

GAO also noted the lack of government-wide guidance for agencies about how to acquire and use AI. “Without such guidance, agencies can't consistently manage AI. And until all requirements are met, agencies can't effectively address AI risks and benefits,” reads a summary of the report.

For more information, read the report “Artificial Intelligence: Agencies Have Begun Implementation but Need to Complete Key Requirements.”

6 - U.K. cyber agency tackles hardware security

Earlier we touched on the security of embedded devices, but now we widen the lens to capture the entire universe of hardware systems: This week, the U.K. National Cyber Security Centre (NCSC) announced that it has launched a new chapter in its problem book devoted exclusively to hardware cybersecurity.

NCSC’s research into hardware security will focus on four areas:

- Understanding how hardware devices behave, and how to secure those behaviors

- Knowing how and to what extent a device can be trusted

- Determining what architectures help to build devices that are secure by design

- Learning how to integrate hardware devices into a secure system

To get more details:

- Read the blog “Researching the hard problems in hardware security”

- Check out the “Hardware security problems” chapter in the NCSC problem book

For more information about hardware security:

- “What is hardware security?” (TechTarget)

- “5 effective tips to enhance your hardware security” (New Electronics)

- “The Challenges of Securing Today’s Hardware Technologies (and How to Overcome Them)” (eWeek)

- “Bolster physical defenses with IoT hardware security” (TechTarget)

VIDEOS

The Future of Hardware Security (RSA Conference)

Hardware Security Following CHIPS Act Enactment: New Era for Supply Chain (RSA Conference)
